This repository contains ROS Noetic packages for simulating and controlling a skid-steer Autonomous Mobile Robot (AMR) in Gazebo. The robot model is written in URDF/Xacro.

The project includes robot modeling, Gazebo plugins, teleoperation, and environment setup.

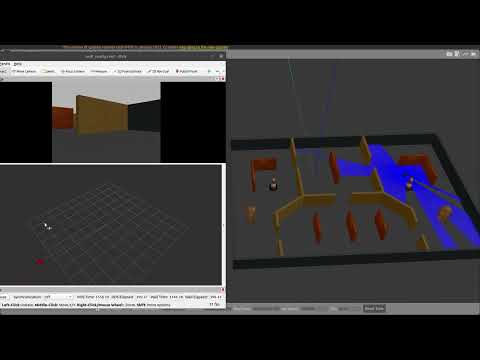

- GIF

- Project Overview

- Repository Structure

- Requirements

- How to Run

- Launch Files

- TF Tree

- ROS Topics

- Demo & Result

- Future Improvements

This project implements a 4-wheel skid-steer mobile robot in ROS 1 Noetic. It includes:

- 360° LiDAR

- Kinect RGB-D Camera

- Two Ultrasonic Sensors

- 4-Wheel Skid-Steer Drive System

- Gazebo Simulation

- SLAM using gmapping

The goal is to build a modular, simulation-ready platform for mapping, perception, and future navigation.
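A 4-wheel skid-steer base is commonly approximated as a differential drive: the two wheels on each side turn at the same speed, and a body twist (linear velocity v, yaw rate ω) splits into left and right speeds through the track width. The sketch below shows that approximation in plain Python. It is an illustration, not code from this repository or from the Gazebo plugin; `SkidSteerGeometry`, the helper functions, and the default radius and track values are hypothetical placeholders to be replaced with the dimensions in `wheels.xacro`. Because skid-steer wheels slip sideways when turning, the effective track width is often tuned to be larger than the measured one.

```python
from dataclasses import dataclass


@dataclass
class SkidSteerGeometry:
    # Hypothetical values: replace with the wheel radius and the
    # left/right wheel spacing from your own wheels.xacro.
    wheel_radius: float = 0.1   # metres
    track_width: float = 0.4    # metres between left and right wheels


def twist_to_wheel_speeds(v: float, omega: float, geom: SkidSteerGeometry):
    """Differential-drive approximation of a 4-wheel skid-steer base.

    v     -- forward velocity (Twist.linear.x) in m/s
    omega -- yaw rate (Twist.angular.z) in rad/s
    Returns (left, right) wheel angular velocities in rad/s; front and
    back wheels on the same side receive the same value.
    """
    v_left = v - omega * geom.track_width / 2.0
    v_right = v + omega * geom.track_width / 2.0
    return v_left / geom.wheel_radius, v_right / geom.wheel_radius


def wheel_speeds_to_twist(w_left: float, w_right: float, geom: SkidSteerGeometry):
    """Inverse mapping: side wheel angular velocities (rad/s) -> (v, omega)."""
    v_left = w_left * geom.wheel_radius
    v_right = w_right * geom.wheel_radius
    v = (v_right + v_left) / 2.0
    omega = (v_right - v_left) / geom.track_width
    return v, omega


if __name__ == "__main__":
    geom = SkidSteerGeometry()
    # Rotation in place: the two sides spin in opposite directions.
    print(twist_to_wheel_speeds(0.0, 1.0, geom))
```

Feeding the output back through `wheel_speeds_to_twist` recovers the original (v, ω), which is a quick sanity check on the geometry parameters.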

```text
AMR_Ros1_skid_steer/
│
├── src/
│   └── skid_steering_pkgs/              # Main robot control package
│       ├── skid_steer_description/      # URDF/Xacro files for robot model
│       │   ├── config/
│       │   ├── meshes/
│       │   ├── launch/
│       │   └── urdf/
│       │       ├── main_skid.xacro      # Base link, box above base, front support for ultrasonic
│       │       ├── plugins.gazebo       # Plugins for skid steer, LiDAR, Kinect, ultrasonic
│       │       ├── sensors.xacro        # LiDAR, Kinect, ultrasonic sensors
│       │       └── wheels.xacro         # 4 wheels: f_right, f_left, b_right, b_left
│       │
│       ├── skid_steer_gazebo/
│       │   ├── launch/                  # spawn_skid_steer --> run robot in Gazebo & RViz
│       │   └── world/                   # Custom Gazebo world
│       │
│       ├── skid_steer_slam/
│       │   ├── launch/
│       │   ├── maps/
│       │   ├── tf/
│       │   └── config/
│       │
│       └── teleop/                      # Keyboard teleoperation
│
├── CMakeLists.txt
├── package.xml
├── photos/                              # Robot images, world snapshots, output results
├── demo_gazebo/                         # Demo simulations
└── README.md
```

| Component | Version |
|---|---|
| Ubuntu | 20.04 |
| ROS | Noetic |
| Gazebo | 11 |
| Python | ≥ 3.8 |

Install the teleop package:

```bash
sudo apt install ros-noetic-teleop-twist-keyboard
```

1️⃣ Clone and build the repository

```bash
cd ~/catkin_ws/src
git clone https://github.com/A-ibrahim9/AMR_Ros1_skid_steeer.git
cd ..
catkin_make
source devel/setup.bash
```

2️⃣ Run the Gazebo simulation

```bash
roslaunch skid_steer_gazebo spwan_skid_steer.launch
```

3️⃣ Run SLAM (mapping)

```bash
roslaunch skid_steer_slam skid_gmapping.launch
```

4️⃣ Control the robot (teleop)

```bash
rosrun teleop_twist_keyboard teleop_twist_keyboard.py
```

5️⃣ Save the generated map (while SLAM is still running)

```bash
rosrun map_server map_saver -f my_map
```

`spwan_skid_steer.launch` (skid_steer_gazebo):

- Launches Gazebo with the custom world.

- Spawns the robot.

- Opens RViz.

- Loads the URDF onto the parameter server.

- Publishes the robot state to TF.

`skid_gmapping.launch` (skid_steer_slam):

- Runs GMapping SLAM.

- Uses the LiDAR for mapping.

- Opens RViz for live map visualization.
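To see what GMapping receives, it helps to convert a scan into Cartesian points. In `sensor_msgs/LaserScan`, beam `i` lies at angle `angle_min + i * angle_increment` in the LiDAR frame, and readings outside `[range_min, range_max]` should be ignored. A minimal ROS-free sketch, with the message fields passed as plain arguments; `scan_to_points` is a hypothetical helper and the example readings are made up:

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, range_min, range_max):
    """Convert LaserScan-style ranges to (x, y) points in the sensor frame.

    Arguments mirror the sensor_msgs/LaserScan fields of the same names.
    Invalid beams (inf/NaN or outside [range_min, range_max]) are skipped.
    """
    points = []
    for i, r in enumerate(ranges):
        if not math.isfinite(r) or r < range_min or r > range_max:
            continue
        angle = angle_min + i * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


if __name__ == "__main__":
    # Four beams, 90 degrees apart, starting straight ahead (+x).
    demo = scan_to_points([1.0, float("inf"), 2.0, 0.05],
                          angle_min=0.0, angle_increment=math.pi / 2,
                          range_min=0.1, range_max=10.0)
    print(demo)
```

In the example, the `inf` beam and the 0.05 m beam (below `range_min`) are dropped, leaving one point ahead of the sensor and one behind it.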

Development steps:

- Started by building the main robot model in URDF/Xacro.

- Added the base link, chassis, wheels, and mounting frame.

- Integrated sensors:

  - LiDAR → mapping and obstacle detection

  - Kinect/depth camera → 3D perception

  - Ultrasonic sensors → short-range proximity sensing

- Added Gazebo plugins:

  - Skid-steer drive plugin

  - LiDAR plugin

  - Depth camera plugin

  - Ultrasonic sensor plugin

- Created a custom Gazebo world.

- Added launch files to:

  - Load the URDF

  - Spawn the robot

  - Start Gazebo and RViz

- Configured the mapping node (GMapping).

- Tuned the LiDAR settings to improve map quality.

- Added map saving and loading.

- Added a keyboard teleop node for manual control during testing.
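`rosrun map_server map_saver -f my_map` writes two files: `my_map.pgm` (the image) and `my_map.yaml` (metadata such as `resolution`, `origin`, `negate`, `occupied_thresh`, and `free_thresh`). When map_server loads the map in its default `trinary` mode, each pixel is turned into an occupancy value using those thresholds. A minimal sketch of that documented rule; `pixel_to_occupancy` and `image_to_grid` are hypothetical helpers, and the defaults 0.65 and 0.196 are the values map_saver typically writes, so check your own YAML:

```python
def pixel_to_occupancy(pixel, occupied_thresh=0.65, free_thresh=0.196, negate=False):
    """Classify one 8-bit map pixel the way map_server's trinary mode does.

    Returns 100 (occupied), 0 (free) or -1 (unknown), the values used in
    nav_msgs/OccupancyGrid.
    """
    # p is the occupancy probability: dark pixels mean occupied
    # unless negate is set in the YAML.
    p = pixel / 255.0 if negate else (255 - pixel) / 255.0
    if p > occupied_thresh:
        return 100
    if p < free_thresh:
        return 0
    return -1


def image_to_grid(rows, **kwargs):
    """Apply pixel_to_occupancy to a 2-D list of pixels (row-major).

    map_server also flips the rows so the grid starts at the map origin;
    that step is omitted here.
    """
    return [[pixel_to_occupancy(px, **kwargs) for px in row] for row in rows]


if __name__ == "__main__":
    # map_saver writes 254 for free, 0 for occupied, 205 for unknown cells.
    print(image_to_grid([[254, 0], [205, 254]]))
```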

- Published Topics

| Topic | Message Type | Description |
|---|---|---|
| /odom | nav_msgs/Odometry | Odometry from Gazebo skid-steer plugin |
| /joint_states | sensor_msgs/JointState | Wheel joint state feedback |
| /scan | sensor_msgs/LaserScan | 360° LiDAR scan data |
| /camera/color/image_raw | sensor_msgs/Image | RGB image from Kinect camera |
| /camera/depth/image_raw | sensor_msgs/Image | Depth image from Kinect |
| /camera/color/camera_info | sensor_msgs/CameraInfo | Camera intrinsics |
| /ultrasonic_front | sensor_msgs/Range | Front ultrasonic distance |
| /ultrasonic_rear | sensor_msgs/Range | Rear ultrasonic distance |
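/odom publishes `nav_msgs/Odometry`, whose pose stores orientation as a quaternion. For a planar robot, a pose estimate can be dead-reckoned by integrating the body twist (v, ω) at each time step, and a yaw angle θ maps to the quaternion (x, y, z, w) = (0, 0, sin(θ/2), cos(θ/2)). The sketch below illustrates both steps under the assumption of a fixed time step; it is not the Gazebo plugin's implementation, and `Pose2D`, `integrate_twist`, and `yaw_to_quaternion` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float = 0.0
    y: float = 0.0
    theta: float = 0.0  # yaw in radians


def integrate_twist(pose: Pose2D, v: float, omega: float, dt: float) -> Pose2D:
    """Advance a planar pose by body velocity (v, omega) over dt seconds.

    Uses midpoint (second-order Runge-Kutta) integration: the heading
    halfway through the step is used for the translation.
    """
    mid_theta = pose.theta + 0.5 * omega * dt
    x = pose.x + v * dt * math.cos(mid_theta)
    y = pose.y + v * dt * math.sin(mid_theta)
    new_theta = pose.theta + omega * dt
    # Wrap yaw to [-pi, pi].
    theta = math.atan2(math.sin(new_theta), math.cos(new_theta))
    return Pose2D(x, y, theta)


def yaw_to_quaternion(theta: float):
    """Return (x, y, z, w) for a rotation of theta about the z axis."""
    return (0.0, 0.0, math.sin(theta / 2.0), math.cos(theta / 2.0))


if __name__ == "__main__":
    pose = Pose2D()
    # Drive straight at 0.5 m/s for 2 s, sampled at 10 Hz.
    for _ in range(20):
        pose = integrate_twist(pose, 0.5, 0.0, 0.1)
    print(pose, yaw_to_quaternion(pose.theta))
```

Pure dead reckoning drifts over time, especially on skid-steer bases that slip while turning, which is one reason the sensor fusion listed under future improvements is useful.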

- Subscribed Topics

| Topic | Message Type | Description |
|---|---|---|
| /cmd_vel | geometry_msgs/Twist | Velocity commands (teleop or nodes) |
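Since any node can publish /cmd_vel, the ultrasonic `sensor_msgs/Range` readings can serve as a simple guard on the robot's velocity input. The sketch below is a hypothetical safety filter, not part of this repository: it cancels forward motion when /ultrasonic_front sees an obstacle closer than a stop distance and backward motion when /ultrasonic_rear does, while leaving rotation alone. `RangeReading`, `guard_cmd_vel`, and the 0.3 m stop distance are placeholders; wiring it into ROS would mean subscribing to the two Range topics and a raw command topic, then republishing the filtered Twist on /cmd_vel.

```python
import math
from dataclasses import dataclass


@dataclass
class RangeReading:
    """Subset of sensor_msgs/Range fields used by the filter."""
    range: float
    min_range: float
    max_range: float


def is_blocked(reading, stop_distance):
    """True if the reading indicates an obstacle closer than stop_distance."""
    if reading is None or math.isnan(reading.range):
        return False  # no usable data; this sketch fails open
    if reading.range > reading.max_range:
        return False  # nothing detected (includes +inf)
    # Readings below min_range (including -inf) are treated conservatively
    # as an obstacle right at the sensor.
    return reading.range < stop_distance


def guard_cmd_vel(v, omega, front, rear, stop_distance=0.3):
    """Return (v, omega) with unsafe linear motion removed.

    Forward motion is cancelled when the front sensor is blocked and
    backward motion when the rear sensor is blocked. Rotation is kept
    so the robot can still turn away from the obstacle.
    """
    if v > 0.0 and is_blocked(front, stop_distance):
        v = 0.0
    elif v < 0.0 and is_blocked(rear, stop_distance):
        v = 0.0
    return v, omega
```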

| Gazebo Simulation | RViz Visualization |
|---|---|
| My World | LiDAR Data |
| Kinect Data | Ultrasonic Data |

The following modules are planned and will be added later:

- AMCL Localization

- Full Navigation Stack (Global + Local Planners)

- Sensor Fusion (IMU, wheel encoders)