Abstract

We report an updated analysis of the radius, mass, and heated surface regions of the massive pulsar PSR J0740+6620 using Neutron Star Interior Composition Explorer (NICER) data from 2018 September 21 to 2022 April 21, a substantial increase in data set size compared to previous analyses. Using a tight mass prior from radio-timing measurements and jointly modeling the new NICER data with XMM-Newton data, the inferred equatorial radius and gravitational mass are  km and

km and  M⊙, respectively, each reported as the posterior credible interval bounded by the 16% and 84% quantiles, with an estimated systematic error ≲ 0.1 km. This result was obtained using the best computationally feasible sampler settings providing a strong radius lower limit but a slightly more uncertain radius upper limit. The inferred radius interval is also close to the

M⊙, respectively, each reported as the posterior credible interval bounded by the 16% and 84% quantiles, with an estimated systematic error ≲ 0.1 km. This result was obtained using the best computationally feasible sampler settings providing a strong radius lower limit but a slightly more uncertain radius upper limit. The inferred radius interval is also close to the  km obtained by Dittmann et al., when they require the radius to be less than 16 km as we do. The results continue to disfavor very soft equations of state for dense matter, with R < 11.15 km for this high-mass pulsar excluded at the 95% probability. The results do not depend significantly on the assumed cross-calibration uncertainty between NICER and XMM-Newton. Using simulated data that resemble the actual observations, we also show that our pipeline is capable of recovering parameters for the inferred models reported in this paper.

km obtained by Dittmann et al., when they require the radius to be less than 16 km as we do. The results continue to disfavor very soft equations of state for dense matter, with R < 11.15 km for this high-mass pulsar excluded at the 95% probability. The results do not depend significantly on the assumed cross-calibration uncertainty between NICER and XMM-Newton. Using simulated data that resemble the actual observations, we also show that our pipeline is capable of recovering parameters for the inferred models reported in this paper.

Original content from this work may be used under the terms of the Creative Commons Attribution 4.0 licence. Any further distribution of this work must maintain attribution to the author(s) and the title of the work, journal citation and DOI.

1. Introduction

Determination of the masses and radii of a set of neutron stars (NSs) can be used to infer properties of the high-density matter in their cores. This is possible due to the one-to-one mapping between the equation of state (EOS) and the mass–radius dependence of the NS (see, e.g., Lattimer & Prakash 2016; Baym et al. 2018). One way to infer mass and radius is to model the X-ray pulses produced by hot regions on the surface of a rapidly rotating NS including relativistic effects (see, e.g., Watts et al. 2016; Bogdanov et al. 2019, and references therein). For example, this technique has been applied in analyzing the data from NASA's Neutron Star Interior Composition Explorer (NICER; Gendreau et al. 2016) for rotation-powered millisecond pulsars. Their thermal emission is dominated by surface regions heated by the bombardment of charged particles from a magnetospheric return current (see, e.g., Ruderman & Sutherland 1975; Arons 1981; Harding & Muslimov 2001). Results for two sources have been released (Miller et al. 2019, 2021; Riley et al. 2019, 2021a; Salmi et al. 2022, hereafter M21, R21, and S22, respectively; Vinciguerra et al. 2024), providing useful constraints for dense matter models (see, e.g., M21; Raaijmakers et al. 2021; Biswas 2022; Annala et al. 2023; Takátsy et al. 2023). These results have also triggered studies on the magnetic field geometries and how nonantipodal they can be (see, e.g., Bilous et al. 2019; Chen et al. 2020; Kalapotharakos et al. 2021; Carrasco et al. 2023).

In this work, we use a new NICER data set (with increased exposure time) to analyze the high-mass pulsar PSR J0740+6620, previously studied in M21, R21, and S22. In those works, the NS mass had a tight prior from radio timing (2.08 ± 0.07 M⊙; Fonseca et al. 2021), and the NS radius was inferred to be, using both NICER and XMM-Newton data,  km in R21 and

km in R21 and  km in M21. However, the results were slightly sensitive to the inclusion of the XMM-Newton data (used to better constrain the phase-averaged source spectrum and hence—indirectly—the NICER background) and assumptions made in the cross-calibration between the two instruments. In S22, the use of NICER background estimates (such as “3C50” from Remillard et al. 2022) was shown to yield results similar to the joint NICER and XMM-Newton analysis, giving confidence in the use of XMM-Newton data as an indirect method of background constraint.

km in M21. However, the results were slightly sensitive to the inclusion of the XMM-Newton data (used to better constrain the phase-averaged source spectrum and hence—indirectly—the NICER background) and assumptions made in the cross-calibration between the two instruments. In S22, the use of NICER background estimates (such as “3C50” from Remillard et al. 2022) was shown to yield results similar to the joint NICER and XMM-Newton analysis, giving confidence in the use of XMM-Newton data as an indirect method of background constraint.

In this paper we use a new NICER data set with more than 1 Ms additional exposure time and more than 0.5 million additional observed counts, an ∼90% increase in the counts, compared to the data sets used in M21 and R21. This is expected to reduce the uncertainties in the inferred NS parameters (Lo et al. 2013; Psaltis et al. 2014), and we explore whether this is indeed the case. We also look in detail at the influence of sampler settings on the credible intervals.

The remainder of this paper is structured as follows. In Section 2.1, we introduce the new data set used for PSR J0740+6620. In Section 2, we summarize the modeling procedure, and in Section 3 we present the results for the updated analysis. We discuss the implications of the results in Section 4 and conclude in Section 5. Inference results using simulated data, resembling our new data, are shown in Appendix A.

2. Modeling Procedure

The modeling procedure is largely shared with that of Bogdanov et al. (2019, 2021), Riley et al. (2019), R21, and S22. We use the X-ray Pulse Simulation and Inference 11 (X-PSI; Riley et al. 2023) code, with versions ranging from v0.7.10 to v1.2.1 12 for inference runs (v1.2.1 used for the headline results), and v2.2.1 for producing the figures. Complete information of each run, including the exact X-PSI version, data products, posterior sample files, and all the analysis files can be found in the Zenodo repository at doi:10.5281/zenodo.10519473. In the next sections we summarize the modeling procedure and focus on how it differs from that used in previous work.

2.1. X-Ray Event Data

The NICER X-ray event data used in this work were processed with a similar procedure as the previous data reported in Wolff et al. (2021) and used in M21 and R21, but with some notable differences (note that a completely different 3C50 procedure was applied in S22). The new data were collected from a sequence of exposures, in the period 2018 September 21–2022 April 21 (observation IDs, hereafter obsIDs, 1031020101 through 5031020445), whereas the period of the previous data set began on the same start date but ended on 2020 April 17 (using the obsIDs shown in Wolff et al. 2021). After filtering the data (described below), this resulted in 2.73381 Ms of on-source exposure time, compared to the previous 1.60268 Ms.

The filtering procedure differed slightly from that used in earlier work. First, similarly to the previous work, we rejected data obtained at low cut-off rigidities of the Earth’s magnetic field (COR_SAX < 2 GeV c–1

13

) to minimize high-energy particle interactions indistinguishable from X-ray events, and we excluded all the events from the noisy detector DetID 34 and the events from DetID 14 when it had a count rate greater than 1.0 counts per second (cps) in 8.0 s bins. We also cut all 2 s bins with total count rate larger than 6 cps to remove generally noisy time intervals. However, here the previously used sorting method of good time intervals (GTIs) from Guillot et al. (2019) and filtering based on the angle between PSR J0740+6620 and the Sun were not applied. Instead, a maximum “undershoot rate” (i.e., detector reset rate) of 100 cps per detector was imposed to produce a cleaned event list with less contamination from the accumulation of solar optical photons (“optical loading,” up to ∼0.4 keV) typically—but not exclusively—happening at low Sun angles. A test of the event extraction procedure holding all other criteria constant and just varying the maximum undershoot rate from a value of 50 to 200 cps (in increments of 50 cps) gave us four different filtered event lists from the same basic list of obsIDs. Testing each event list for pulsation detection significance using a  test (Buccheri et al. 1983) showed that the pulsar was clearly detected at highest significance for the maximum undershoot rate of 100 cps and thus it is this event list that we settled on for this analysis.

test (Buccheri et al. 1983) showed that the pulsar was clearly detected at highest significance for the maximum undershoot rate of 100 cps and thus it is this event list that we settled on for this analysis.

We note that our new procedure is expected to be less prone to the type of systematic selection bias suggested for the older GTI sorting method in Essick (2022; although even there the effect was only marginal, as discussed in Section 2.1 of S22). This is because the undershoot rates do not correlate with the flux from the optically dim pulsar, and the detection significance is maximized only by selecting from four different data-filtering options.

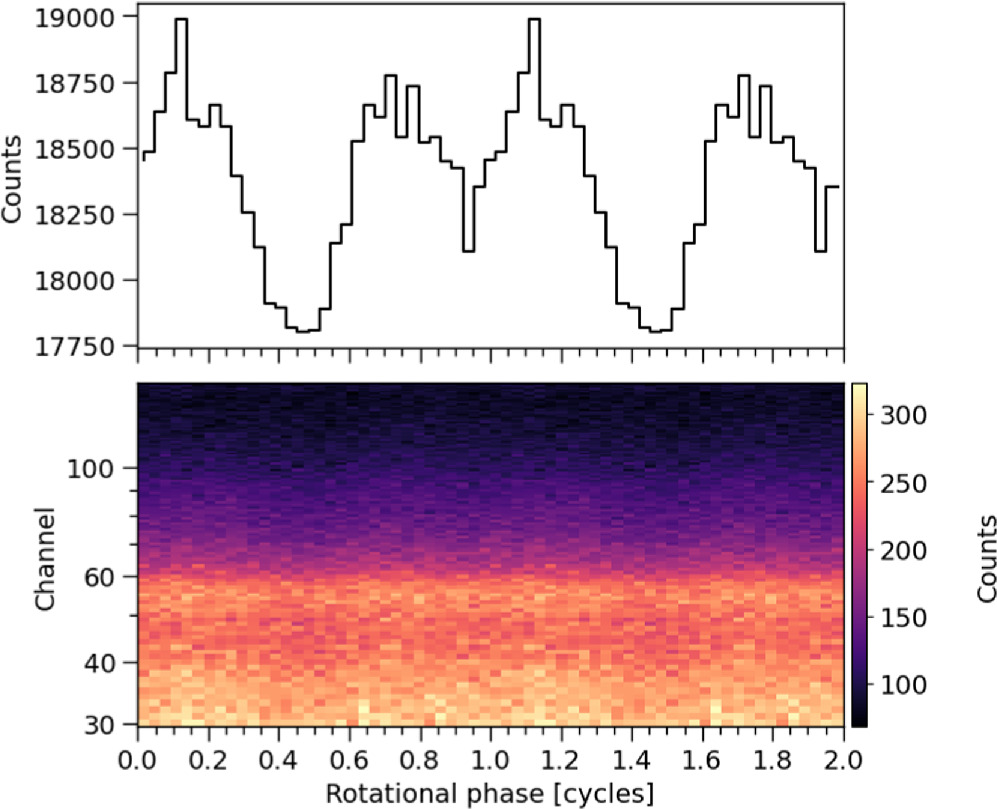

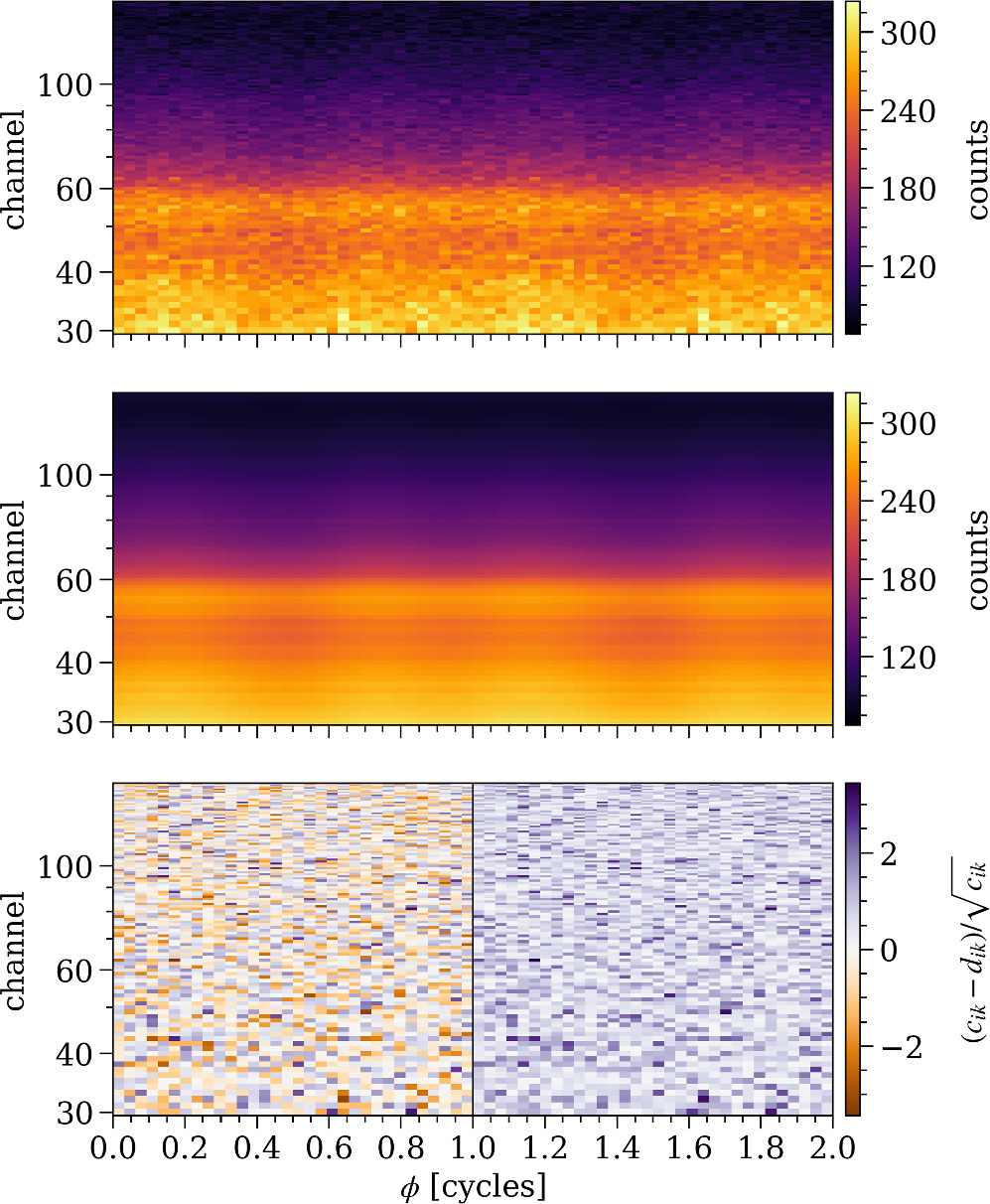

In the pulse profile analysis, we used again the pulse invariant (PI) channel subset [30, 150), corresponding to the nominal photon energy range [0.3, 1.5] keV, as in R21 and S22, unless mentioned otherwise. The number of rotational phase bins in the data is also 32 as before. The data split over two rotational cycles are visualized in Figure 1.

Figure 1. The new phase-folded PSR J0740+6620 event data for two rotational cycles (for clarity). The top panel shows the pulse profile summed over the channels. As in Figure 1 of R21, the total number of counts is given by the sum over all phase–channel pairs (over both cycles). For the modeling all the event data are grouped into a single rotational cycle instead.

Download figure:

Standard image High-resolution imageFor the analyses including XMM-Newton observations, we used the same phase-averaged spectral data and blank-sky observations (for background constraints) as in M21, R21, and S22 with the three EPIC instruments (pn, MOS1, and MOS2). Unless mentioned otherwise, we included the energy channels [57, 299) for pn, and [20, 100) for both MOS1 and MOS2, which are the same choices as in R21 and S22 (although this was not explicitly stated in those papers). M21 made the same choices except for including one more high-energy channel for the pn instrument. The data are visualized in Figures 4 and 16 of R21.

2.2. Instrument Response Models

For the XMM-Newton EPIC instruments we used the same ancillary response files (ARFs) and redistribution matrix files (RMFs) as in R21 and S22. For the NICER events utilized in this study, the calibration version is xti20210707, and the HEASOFT version is 6.30.1 containing NICERDAS 2022-01-17V009. We generated response matrices differently from the previous analyses in that we used a combined response file, 14 which was tailored for the focal plane module (FPM) information now maintained in the NICER FITS event files. Thus, for the analysis in this study, the effective area will reflect the exact FPM exposures resulting in the rescaling of effective area to account for the complete removal of one detector (DetID 34) and the partial removal of another detector (DetID 14) as addressed above. The resulting calibration products are available on Zenodo at doi:10.5281/zenodo.10519473.

When modeling the observed signal, we allowed uncertainty in the effective areas of both NICER and all three XMM-Newton detectors, due the lack of an absolute calibration source. As in R21 and S22, we define energy-independent effective-area scaling factors as

where αNICER and αXMM are the overall scaling factors for NICER and XMM-Newton, respectively (used to multiply the effective area of the instrument), αSH is a shared scaling factor between all the instruments (to simulate absolute uncertainty of the X-ray flux calibration), and  and

and  are telescope-specific scaling factors (to simulate relative uncertainty between the NICER and XMM-Newton calibration). As before, we assume that the factors are identical for pn, MOS1, and MOS2 (which may not be true). In our headline results, we apply the restricted 10.4% uncertainty (Ishida et al. 2011; Madsen et al. 2017; Plucinsky et al. 2017)

15

in the overall scaling factors, as in the exploratory analysis of Section 4.2 in R21 (see also Section 3.3 of S22), which results from assuming a 10% uncertainty in αSH and a 3% uncertainty in the telescope-specific factors. This choice is tighter than the 15% uncertainty used in the main results of R21 (who assumed 10.6% uncertainty in both shared and telescope-specific factors), but is similar to that used in M21 and S22. However we have also explored the effect of different choices for the effective-area scaling factors in Section 3.2.

are telescope-specific scaling factors (to simulate relative uncertainty between the NICER and XMM-Newton calibration). As before, we assume that the factors are identical for pn, MOS1, and MOS2 (which may not be true). In our headline results, we apply the restricted 10.4% uncertainty (Ishida et al. 2011; Madsen et al. 2017; Plucinsky et al. 2017)

15

in the overall scaling factors, as in the exploratory analysis of Section 4.2 in R21 (see also Section 3.3 of S22), which results from assuming a 10% uncertainty in αSH and a 3% uncertainty in the telescope-specific factors. This choice is tighter than the 15% uncertainty used in the main results of R21 (who assumed 10.6% uncertainty in both shared and telescope-specific factors), but is similar to that used in M21 and S22. However we have also explored the effect of different choices for the effective-area scaling factors in Section 3.2.

2.3. Pulse Profile Modeling Using X-PSI

As in previous NICER analyses (e.g., M21; R21; S22), we use the “Oblate Schwarzschild” approximation to model the energy-resolved X-ray pulses from the NS (see, e.g., Miller & Lamb 1998; Nath et al. 2002; Poutanen & Gierliński 2003; Cadeau et al. 2007; Morsink et al. 2007; Lo et al. 2013; AlGendy & Morsink 2014; Bogdanov et al. 2019; Watts 2019). In addition, we use now a corrected BACK_SCAL factor (see Section 3.4 of S22). As before, we use the mass, inclination, and distance priors from Fonseca et al. (2021), interstellar attenuation model TBabs (Wilms et al. 2000, updated in 2016), and the fully ionized hydrogen atmosphere model NSX (Ho & Lai 2001). As shown in Salmi et al. (2023), the assumptions for the atmosphere do not seem to significantly affect the inferred radius of PSR J0740+6620. A larger sensitivity to the atmosphere choices was found in Dittmann et al. (2024), but this could be related to their sampling methodology or their larger prior space, e.g., including NS radii above 16 km. The shapes of the hot emitting regions are again characterized using the single-temperature-unshared (ST-U) model with two circular uniform temperature regions (Riley et al. 2019).

2.4. Posterior Computation

We compute the posterior samples using PyMultiNest (Buchner et al. 2014) and MultiNest (Feroz & Hobson 2008; Feroz et al. 2009, 2019), as in the previous X-PSI analyses. For the headline joint NICER and XMM-Newton results of this work, we use the following MultiNest settings: 4 × 104 live points and a 0.01 sampling efficiency (SE). 16 For the NICER-only analysis, and the exploratory NICER-XMM analysis, we used the same number of live points but SE = 0.1. We discuss sensitivity to sampler settings further in Section 3.

3. Inferences

We start first by presenting the inference results for the updated NICER-only analysis, and then proceed to the headline results obtained by fitting jointly the NICER and XMM-Newton data. To test the robustness of the analysis, the corresponding inference results using a synthetic data set are presented in Appendix A. In what follows we focus primarily on the constraints on radius since the posterior on the mass is essentially unchanged from the highly informative radio prior.

3.1. NICER-only Fit

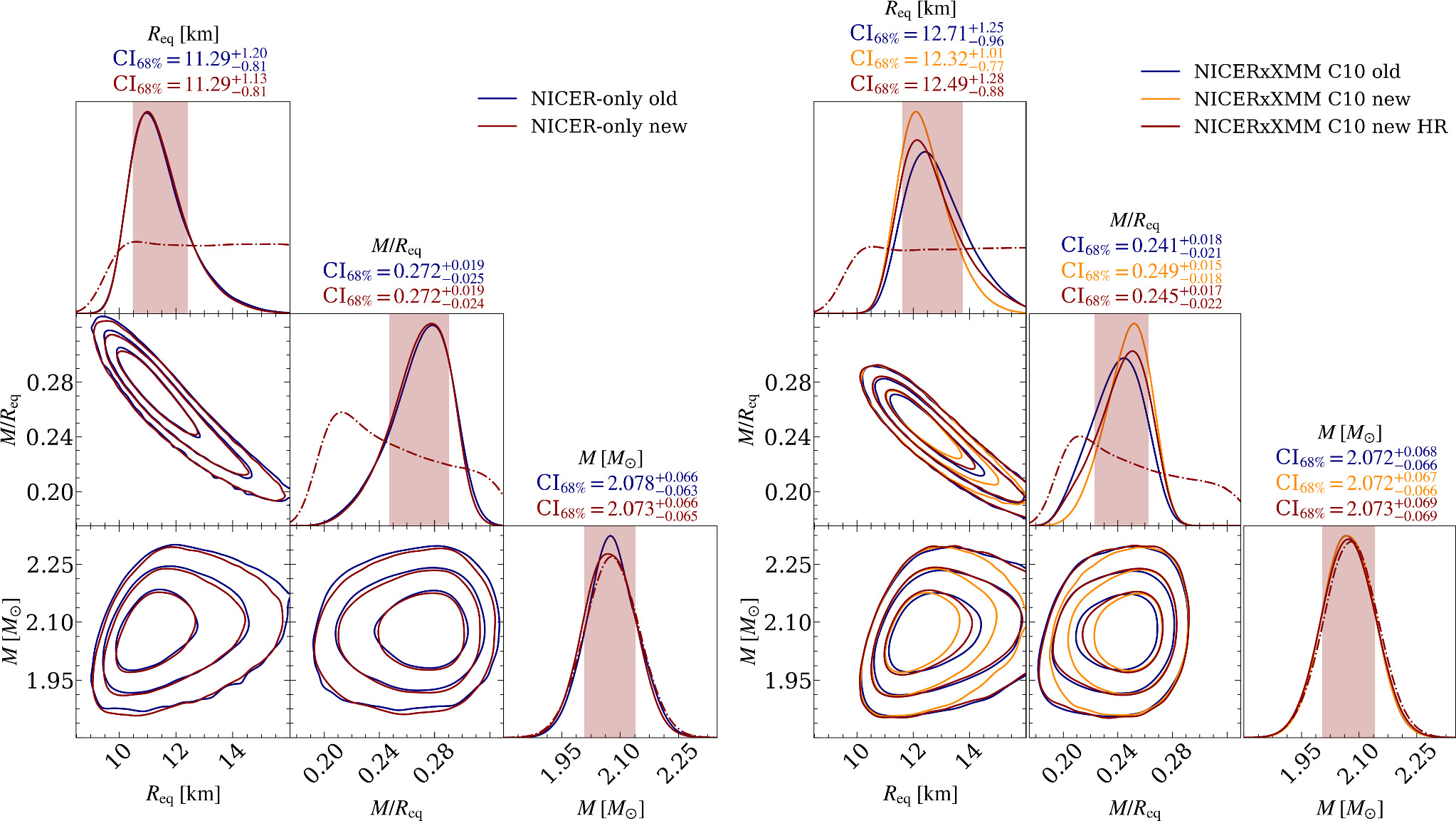

When analyzing only the new NICER data set (described in Section 2.1), we find better constraints for some of the model parameters, but not for all of them. As seen in the left panel of Figure 2, no significant improvement in radius constraints is found compared to the old results from R21 (where  km). These old results used a larger effective-area uncertainty and applied importance sampling instead of directly sampling with the most updated mass, distance, and inclination priors.

17

For the new data, the inferred radius is

km). These old results used a larger effective-area uncertainty and applied importance sampling instead of directly sampling with the most updated mass, distance, and inclination priors.

17

For the new data, the inferred radius is  km and mass is

km and mass is  M⊙. However, a few of the other parameters, e.g., the sizes and temperatures of the emitting regions, are better constrained with the new data, as seen in the online images of Figure 3.

M⊙. However, a few of the other parameters, e.g., the sizes and temperatures of the emitting regions, are better constrained with the new data, as seen in the online images of Figure 3.

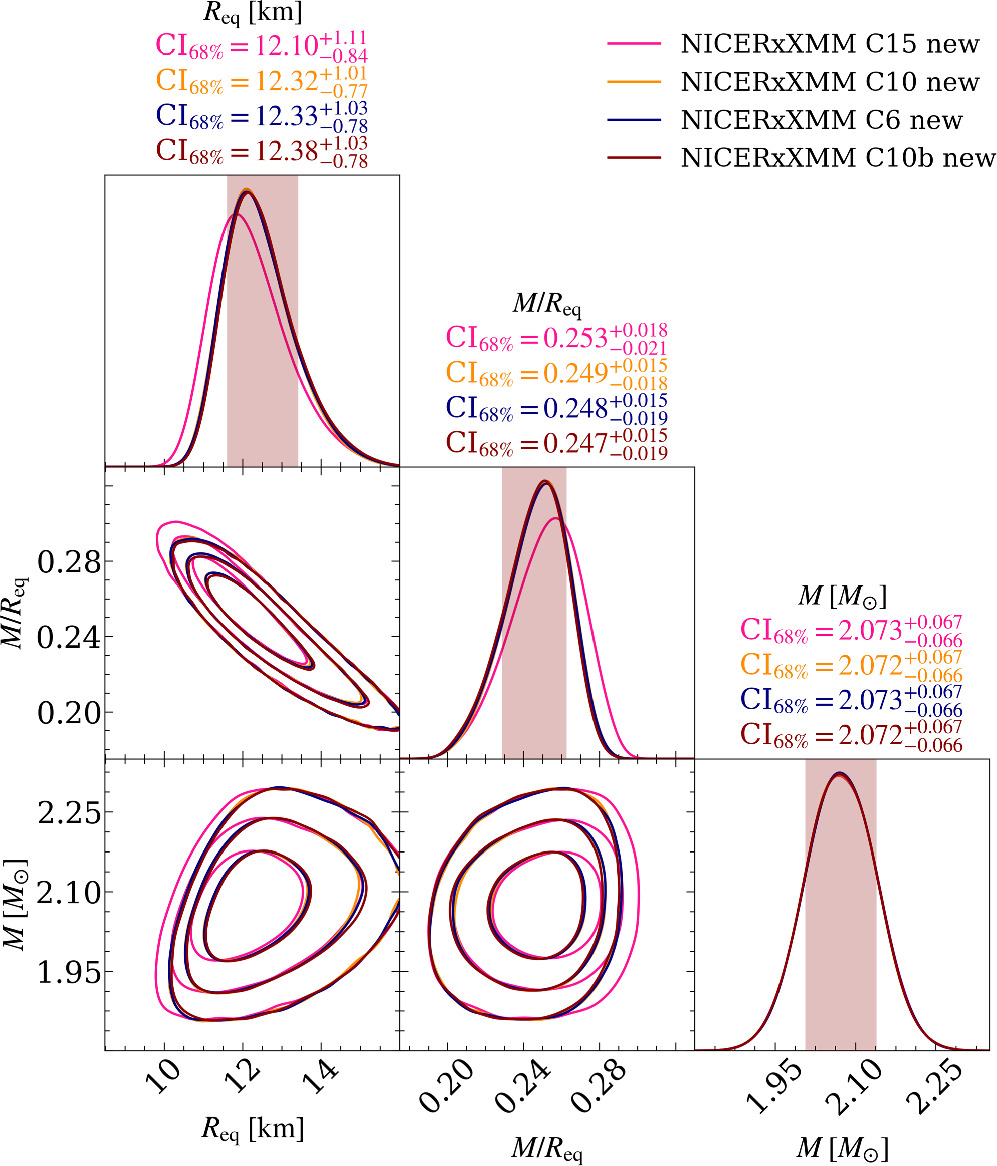

Figure 2. Radius, compactness, and mass posterior distributions using the new NICER data set and ST-U model in the NICER-only analysis (left panel) and in the joint NICER and XMM-Newton analysis (right panel) compared to the old results from R21. Here “C10” refers to a ±10.4% calibration uncertainty in the overall effective-area scaling factors, “new” and “old” without qualification have SE = 0.1 and “HR” refers to the new headline results with SE = 0.01. Dash–dotted functions represent the marginal prior probability density functions (PDFs). The shaded vertical bands show the 68.3% credible intervals (for the posteriors corresponding to the red curves), and the contours in the off-diagonal panels show the 68.3%, 95.4%, and 99.7% credible regions. See the captions of Figure 5 of S22 and Figure 5 of R21 for additional details about the figure elements.

Download figure:

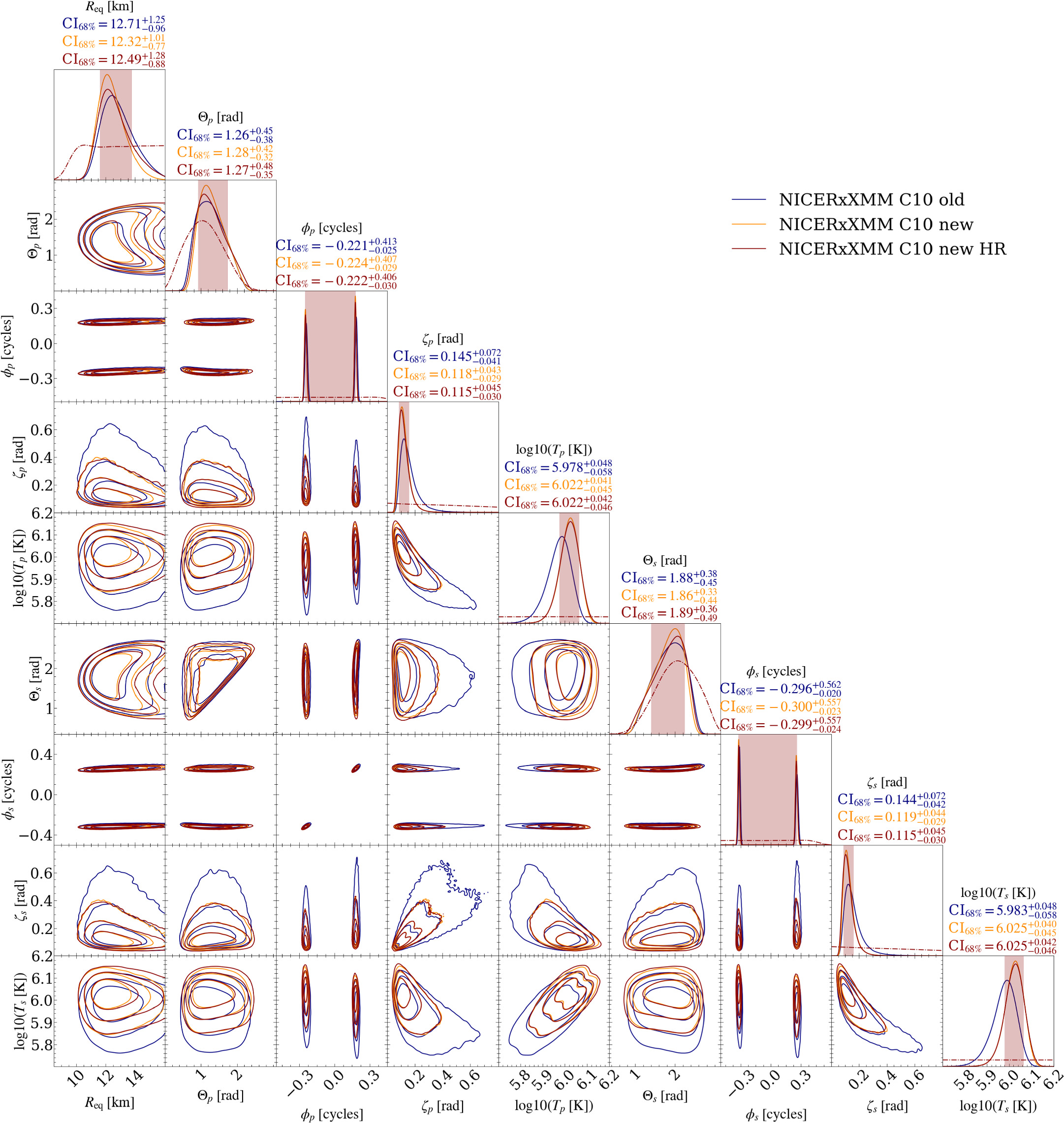

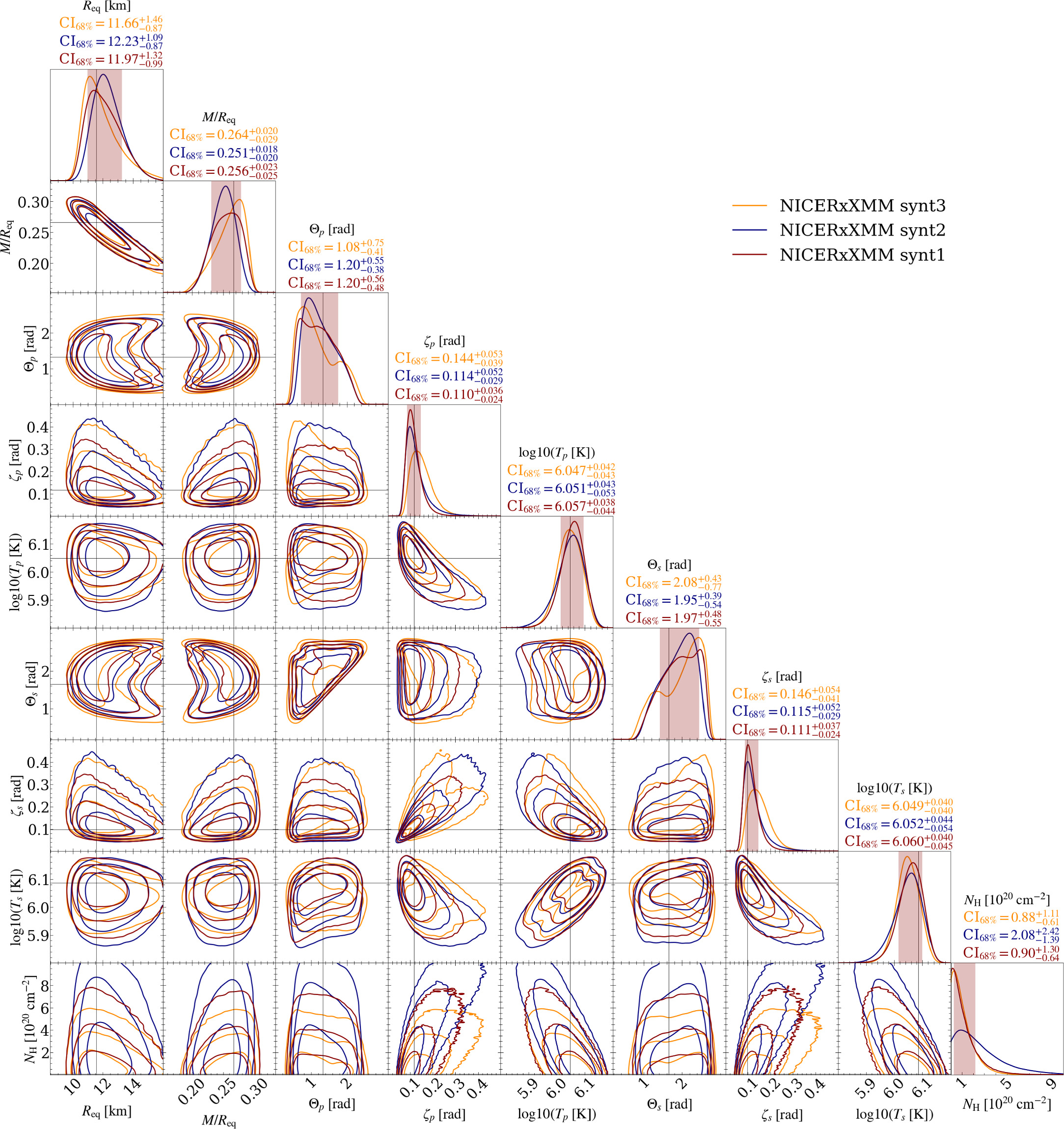

Standard image High-resolution imageFigure 3.

Posterior distributions for the hot region parameters using the new NICER data set and ST-U model in the joint NICER and XMM-Newton analysis compared to the old results from R21. See the caption of Figure 2 for more details about the figure elements. The complete figure set includes the posterior distributions for the remaining parameters (both for the NICER-only and the joint NICER and XMM-Newton analyses). (The complete figure set (4 images) is available.)

Download figure:

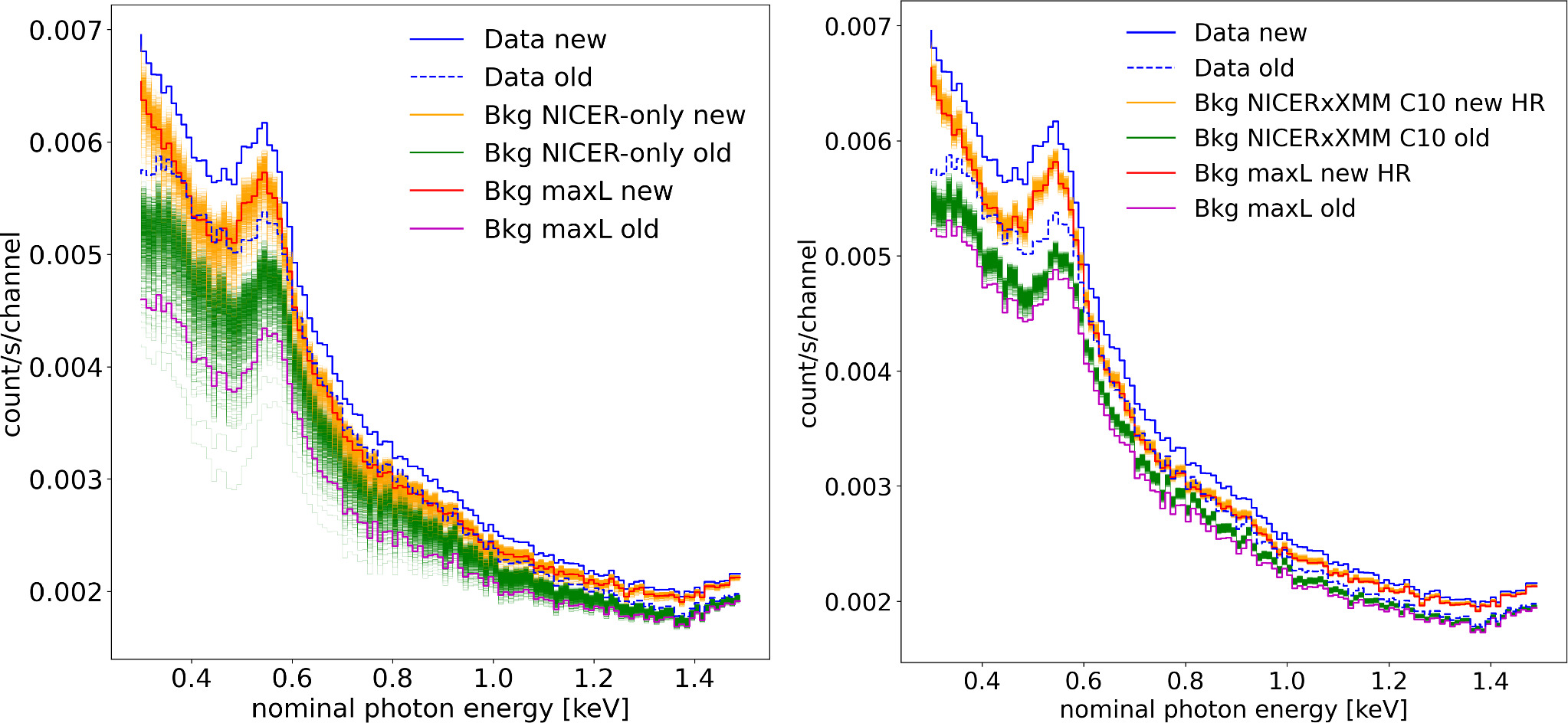

Standard image High-resolution imageThe inferred background is also more tightly constrained and the source versus total count-rate ratio is significantly smaller for the new data (about 5%–10%, in contrast to the previous 5%–20%) as seen in the left panel of Figure 4. This could explain why the radius constraints do not get tighter (see the discussion in Section 4). In particular, the background corresponding to the maximum likelihood sample is larger for the new data (source versus total ratio is about 7% instead of 17%). However, we note that with the old data most of the equally weighted posterior samples (and also the maximum posterior sample) have larger backgrounds than the maximum likelihood sample, which is shown in Figure 4 where the magenta curve is below most of the green curves (and the maximum posterior source versus total ratio is as small as 5%). In contrast, the maximum likelihood sample for the new data has a background that is close to the average and maximum posterior samples.

Figure 4. Comparison of the inferred NICER background for different data sets and analyses. Left panel: the blue solid and dashed stepped curves show the total NICER count-rate spectra for the new and old data sets, respectively (note that the count rate has slightly changed because of the different filtering described in Section 2.1). The orange and green stepped curves show the background curves that maximize the likelihood for 1000 equally weighted posterior samples in the NICER-only analysis with the new and old data, respectively. Accordingly, the red and magenta stepped curves show the background curves corresponding to the maximum likelihood sample for each run. Right panel: same as the left panel, except the inferred backgrounds are now shown for the joint NICER and XMM-Newton runs with the new and old data, respectively (new results are shown for the HR run, but an almost identical background is inferred when using the old sampler settings).

Download figure:

Standard image High-resolution image3.2. NICER and XMM-Newton Fit

Analyzing the new NICER data jointly with the XMM-Newton data (right panel of Figure 2), we find an inferred radius of  km for our headline results (“new HR” in the figure, see Section 2.4). For comparison we show the radius constraints obtained in R21 with comparable cross-calibration uncertainty (“C10 old” here, Figure 14 of R21

18

),

km for our headline results (“new HR” in the figure, see Section 2.4). For comparison we show the radius constraints obtained in R21 with comparable cross-calibration uncertainty (“C10 old” here, Figure 14 of R21

18

),  km, and the constraints we obtained for the new data but with the same sampler settings as in the older analysis (SE = 0.1),

km, and the constraints we obtained for the new data but with the same sampler settings as in the older analysis (SE = 0.1),  km. The new radius interval is thus about ∼20% tighter (and shifted to smaller values) than the old when using the same sampler settings, but roughly equally tight when using the new settings.

km. The new radius interval is thus about ∼20% tighter (and shifted to smaller values) than the old when using the same sampler settings, but roughly equally tight when using the new settings.

The lower limit on the 68% credible interval for the radius appears less sensitive to the sampler settings than the upper limit. Investigation of the likelihood surface reveals why this is the case. The likelihood falls off sharply at the lowest radii; for very small stars it is simply not possible to meet both the background and pulsed amplitude constraints given the tight geometric prior on the observer inclination for this source (Fonseca et al. 2021). However the likelihood surface for radii above the maximum at ∼11 km is very flat, making it harder to constrain the upper limit. In addition, as one approaches the highest radii, there are more solutions with hot spots that are smaller, hotter, and closer to the poles (see Figure 3). The likelihood surface in the space of spot size and temperature has a sharp point in this region of parameter space, and it is therefore important to resolve it well. In moving from SE = 0.1 to SE = 0.01 we observed that this region was sampled more extensively, and it appears to be this change that leads to the increase in the upper limit of the radius credible interval as SE gets smaller. The computational cost for the smaller SE, however, is much higher, and further increases in live points or reductions in SE to check convergence of the credible interval were not feasible.

To check whether we had now mapped the likelihood surface in spot size–temperature space sufficiently well to determine exactly how the likelihood falls off, we performed an additional high-resolution (40,000 live points, SE = 0.01) run, but restricting the prior on the secondary spot temperature to log10

Ts

[K] > 6.15. The sharp end of the likelihood surface was much more thoroughly sampled, with the drop-off in likelihood now well characterized. The inferred radius for the restricted prior run is  km, but with overall maximum likelihoods lower than those in the full prior run. This suggests that any further increase of the upper limit of the radius credible interval in the full prior run (using more computational resources) is unlikely to be more than ∼0.1 km. We take this as an estimate for the systematic error in the full prior run.

km, but with overall maximum likelihoods lower than those in the full prior run. This suggests that any further increase of the upper limit of the radius credible interval in the full prior run (using more computational resources) is unlikely to be more than ∼0.1 km. We take this as an estimate for the systematic error in the full prior run.

As in R21 and S22, the inferred radius for the joint NICER and XMM-Newton case is larger than for the NICER-only case. However, this time the median values from the joint analyses are slightly closer to the NICER-only result. The new radius results are also slightly more constrained than in S22, where the inferred radius was  km using the 3C50-filtered NICER data set (with a lower background limit) and XMM-Newton data. The inferred values for all of the parameters for the HR run are shown in Table 1, and the remaining posterior distributions are shown in Figure 3. From there we see that, compared to the old R21 results, consistent but slightly tighter constraints are obtained for hot region temperatures and sizes. The credible intervals for these parameters do not seem to depend significantly on the sampler settings used. Even though the new data are more restrictive, we find that the ST-U model employed can still reproduce the data well, as seen from the residuals in Figure 5.

km using the 3C50-filtered NICER data set (with a lower background limit) and XMM-Newton data. The inferred values for all of the parameters for the HR run are shown in Table 1, and the remaining posterior distributions are shown in Figure 3. From there we see that, compared to the old R21 results, consistent but slightly tighter constraints are obtained for hot region temperatures and sizes. The credible intervals for these parameters do not seem to depend significantly on the sampler settings used. Even though the new data are more restrictive, we find that the ST-U model employed can still reproduce the data well, as seen from the residuals in Figure 5.

Figure 5. The new NICER count data, posterior-expected count numbers (averaged from 200 equally weighted posterior samples), and (Poisson) residuals for the ST-U model in the joint NICER and XMM-Newton analysis (for the HR run). See Figure 6 of R21 for additional details about the figure elements.

Download figure:

Standard image High-resolution imageTable 1. Summary Table for the New Joint NICER and XMM-Newton Results

| Parameter | Description | Prior PDF (Density and Support) |

|

|

|

|---|---|---|---|---|---|

| P [ms] | coordinate spin period | P = 2.8857, fixed | ⋯ | ⋯ | ⋯ |

| M [M⊙] | gravitational mass |

|

| 0.01 | 2.005 |

| cosine Earth inclination to spin axis |

|

| 0.00 | 0.040 |

| with joint prior PDF N( μ ⋆, Σ⋆) | μ ⋆ = [2.082, 0.0427]⊤ | ||||

![${{\boldsymbol{\Sigma }}}^{\star }=\left[\begin{array}{ll}{0.0703}^{2} & {0.0131}^{2}\\ {0.0131}^{2} & {0.00304}^{2}\end{array}\right]$](https://content.cld.iop.org/journals/0004-637X/974/2/294/revision1/apjad5f1fieqn25.gif)

| |||||

| Req [km] | coordinate equatorial radius | Req ∼ U(3rg(1), 16) |

| 0.66 | 11.24 |

| with compactness condition | Rpolar/rg(M) > 3 | ||||

| with effective gravity condition |

, ∀θ , ∀θ

| ||||

| Θp [radians] | p region center colatitude |

|

| 0.32 | 1.28 |

| Θs [radians] | s region center colatitude |

|

| 0.29 | 1.70 |

| ϕp [cycles] | p region initial phase | ϕp ∼ U(−0.5, 0.5), wrapped | bimodal | 3.72 | −0.255 |

| ϕs [cycles] | s region initial phase | ϕs ∼ U(−0.5, 0.5), wrapped | bimodal | 3.68 | −0.323 |

| ζp [radians] | p region angular radius | ζp ∼ U(0, π/2) |

| 2.72 | 0.151 |

| ζs [radians] | s region angular radius | ζs ∼ U(0, π/2) |

| 2.70 | 0.124 |

| no region-exchange degeneracy | Θs ≥ Θp | ||||

| nonoverlapping hot regions | function of (Θp , Θs , ϕp , ϕs , ζp , ζs ) | ||||

![${\mathrm{log}}_{10}\left({T}_{p}\ [{\rm{K}}]\right)$](https://content.cld.iop.org/journals/0004-637X/974/2/294/revision1/apjad5f1fieqn34.gif)

| p region NSX effective temperature |

, NSXlimits , NSXlimits |

| 3.20 | 6.054 |

![${\mathrm{log}}_{10}\left({T}_{s}\ [{\rm{K}}]\right)$](https://content.cld.iop.org/journals/0004-637X/974/2/294/revision1/apjad5f1fieqn37.gif)

| s region NSX effective temperature |

, NSXlimits , NSXlimits |

| 3.23 | 6.093 |

| D [kpc] | Earth distance | D ∼ skewnorm(1.7, 1.0, 0.23) |

| 0.07 | 1.57 |

| NH [1020 cm−2] | interstellar neutral H column density | NH ∼ U(0, 10) |

| 1.33 | 0.25 |

| αNICER | NICER effective-area scaling | αNICER, αXMM ∼ N( μ , Σ) |

| 0.00 | 1.00 |

| αXMM | XMM-Newton effective-area scaling | αNICER, αXMM ∼ N( μ , Σ) |

| 0.02 | 0.89 |

| with joint prior PDF N( μ , Σ) | μ = [1.0, 1.0]⊤ | ||||

![${\boldsymbol{\Sigma }}=\left[\begin{array}{ll}{0.104}^{2} & {0.100}^{2}\\ {0.100}^{2} & {0.104}^{2}\end{array}\right]$](https://content.cld.iop.org/journals/0004-637X/974/2/294/revision1/apjad5f1fieqn44.gif)

| |||||

| Sampling process information | |||||

| number of free parameters: 15 | |||||

| number of processes (multimodes): 1 | |||||

| number of live points: 4 × 104 | |||||

| SE: a 0.01 | |||||

| termination condition: 0.1 | |||||

evidence:

| |||||

| number of core hours: 84,348 | |||||

| likelihood evaluations: 66,823,871 | |||||

Notes. We show the prior PDFs, 68.3% credible intervals around the median  , Kullback–Leibler divergence DKL in bits representing the prior-to-posterior information gain, and the maximum likelihood nested sample

, Kullback–Leibler divergence DKL in bits representing the prior-to-posterior information gain, and the maximum likelihood nested sample  for all the parameters. Parameters for the primary hot region are denoted with a subscript p and the parameters for the secondary hot region with a subscript s. Note that the zero phase definition for ϕp

and ϕs

differs by 0.5 cycles. Additional details of the parameter descriptions, the prior PDFs, and the sampling process information are given in the notes of Table 1 in R21.

for all the parameters. Parameters for the primary hot region are denoted with a subscript p and the parameters for the secondary hot region with a subscript s. Note that the zero phase definition for ϕp

and ϕs

differs by 0.5 cycles. Additional details of the parameter descriptions, the prior PDFs, and the sampling process information are given in the notes of Table 1 in R21.

Download table as: ASCIITypeset image

Just as for the NICER-only case (in Section 3.1), the new inferred best-fitting NICER background versus source count ratio changes compared to the previous analysis (see the right panel of Figure 4). However the change is smaller for the joint NICER and XMM-Newton analysis (regardless of the sampler settings); the maximum likelihood source count rate versus total count rate is now around 5.5% instead of the previous 8.5%. When looking at the bulk of the equally weighted posterior samples, the difference is even smaller; in both cases the range of the source count rate versus the total count rate is around 4%–7%. The smaller change is entirely to be expected, since XMM-Newton acts indirectly as a constraint on the NICER background and already did so in the older analysis.

We also found (for exploratory analysis using SE = 0.1) that different assumptions for the cross-calibration scaling factors do not significantly affect the new results. This is shown in Figure 6. We see that the median radius only increases from around 12.1 to around 12.3 km when applying a ±10.4% instead of a ±15% uncertainty in the overall scaling factors. However, applying a ±5.8% uncertainty 19 produces almost identical results to those of ±10.4%. We also explored the effect of a different choice for the energy channels included in the analysis. As shown in Figure 6, we found no significant difference in the inferred radius when using the channel choices of M21 instead of those reported in Section 2.1 (i.e., NICER channels only up to 123 instead of 149 and XMM-Newton pn channels up to 299 instead of 298).

Figure 6. Posterior distributions for the spacetime parameters using the new NICER data set and ST-U model in the joint NICER and XMM-Newton analyses, with different assumptions for the effective-area scaling uncertainty and the used energy channels. Here “C15,” “C10,” and “C6” refer to runs with ±15%, ±10.4%, and ±5.8% uncertainties in the overall effective-area scaling factors, respectively, and “C10b” to a run with a ±10.4% uncertainty and an alternative channel choice (see the text at the end of Section 3.2). The contours for the three latter cases are almost exactly overlapping. See the caption of Figure 2 for additional details about the figure elements.

Download figure:

Standard image High-resolution image4. Discussion

4.1. Updated PSR J0740+6620 Parameters and Comparison to Older Results

Our new inferred mass and radius for PSR J0740+6620, using NICER data from 2018 September 21 to 2022 April 21 and joint modeling with XMM-Newton, are M =2.073 ± 0.069 M⊙ (largely unchanged from the prior) and  km (68% credible intervals). The 90% credible interval for the radius is

km (68% credible intervals). The 90% credible interval for the radius is  km and the 95% credible interval is

km and the 95% credible interval is  km. The results continue to disfavor a very soft EOS, with R < 10.96 km excluded at the 97.5% level and R < 11.15 km excluded at the 95% level (the X% credible interval runs from the (50 − X/2)% to the (50 + X/2)% quantile). These lower limits are more constraining than the corresponding headline values reported in R21 (R < 10.71 km excluded at the 97.5% level and R < 10.89 km excluded at the 95% level). Taken together with the fact that gravitational-wave observations favor smaller stars for intermediate-mass (∼1.4 M⊙) NSs (Abbott et al. 2018, 2019) the two measurement techniques provide tight and complementary bounding constraints on EOS models. Tighter lower limits on the radius of such a high-mass pulsar should be more informative regarding, for example, the possible presence of quark matter in NS cores (see, e.g., Annala et al. 2022).

km. The results continue to disfavor a very soft EOS, with R < 10.96 km excluded at the 97.5% level and R < 11.15 km excluded at the 95% level (the X% credible interval runs from the (50 − X/2)% to the (50 + X/2)% quantile). These lower limits are more constraining than the corresponding headline values reported in R21 (R < 10.71 km excluded at the 97.5% level and R < 10.89 km excluded at the 95% level). Taken together with the fact that gravitational-wave observations favor smaller stars for intermediate-mass (∼1.4 M⊙) NSs (Abbott et al. 2018, 2019) the two measurement techniques provide tight and complementary bounding constraints on EOS models. Tighter lower limits on the radius of such a high-mass pulsar should be more informative regarding, for example, the possible presence of quark matter in NS cores (see, e.g., Annala et al. 2022).

Comparison to the older results is complicated by the fact that changes have been made to both the data set and the analysis method. The old headline results from R21, with a radius of  km, were obtained using a larger effective-area scaling uncertainty than in this paper. Using compressed effective-area scaling uncertainties (as used in this paper), and importance sampling the original results with a new prior, R21 reported

km, were obtained using a larger effective-area scaling uncertainty than in this paper. Using compressed effective-area scaling uncertainties (as used in this paper), and importance sampling the original results with a new prior, R21 reported  km as the radius. However, in this paper we also use more extensive sampler settings than before (see Section 2.4), and this also contributes to the differences in the new reported headline values. The lower limit for the radius credible intervals seems largely insensitive to the sampler settings, whereas the upper limit is more sensitive due—in large part—to flatness of the likelihood surface at high radii. While we are not able to formally prove convergence of our inferred radius we have investigated the factors driving the upper limit of the credible interval, and on the basis of the analyses carried out do not expect substantial further broadening (for details see Section 3.2). We note that Dittmann et al. (2024) obtain an ∼0.5 km higher upper limit for the radius (when limiting the radius to be below 16 km as we do); possible reasons for this are discussed in Section 4.3.

km as the radius. However, in this paper we also use more extensive sampler settings than before (see Section 2.4), and this also contributes to the differences in the new reported headline values. The lower limit for the radius credible intervals seems largely insensitive to the sampler settings, whereas the upper limit is more sensitive due—in large part—to flatness of the likelihood surface at high radii. While we are not able to formally prove convergence of our inferred radius we have investigated the factors driving the upper limit of the credible interval, and on the basis of the analyses carried out do not expect substantial further broadening (for details see Section 3.2). We note that Dittmann et al. (2024) obtain an ∼0.5 km higher upper limit for the radius (when limiting the radius to be below 16 km as we do); possible reasons for this are discussed in Section 4.3.

Inspecting the posterior distributions in Figure 3, one can see that most of the large-radius solutions (explored better by the HR run) are on average connected to smaller and hotter regions closer to the rotational poles. The pointy ends of the curved posteriors seen in the radius–colatitude and spot size–temperature planes can be challenging for samplers to explore, hence our use in this paper of more extensive MultiNest settings and targeted (restricted prior) runs to explore this space. Moving from SE = 0.1 to SE = 0.01 resulted in more extensive sampling of this region likely causing the broadening of the radius credible interval. Similar slow broadening has not yet been encountered in X-PSI analyses for other NICER sources, which are brighter, but it is clearly something to be alert to (although sensitivity to the sampler settings was also seen in the analysis of PSR J0030+0451 in Vinciguerra et al. 2024). Additional independent prior constraints on hot spot properties that might cut down the range of possibilities would be extremely helpful for PSR J0740+6620.

Some of the model parameters are constrained more tightly with the new NICER data set (while still consistent with the old results) in both the NICER-only and the joint NICER and XMM-Newton analyses (mostly regardless of the sampler settings). In particular, the angular radii of the hot regions are now constrained around 35% more tightly, and temperatures around 20% more tightly. The inferred regions are on average slightly smaller and hotter than before. In addition, the interstellar hydrogen column density is constrained at least 20% more tightly toward the lower end of the prior. The credible intervals for the hot region colatitudes also become narrower by 10%–15%, but only when using the same sampler settings. For the final headline joint NICER and XMM-Newton analysis the colatitude constraints do not significantly differ from the old results. The offset angle from an exactly antipodal hot region configuration is inferred to be  , which is similar to the value of

, which is similar to the value of  inferred from the old analysis. Thus, in both cases the offset angle is above ∼25° with 84% probability (which is the same as reported in S22 using the 3C50 data set). This implies a likely deviation from a centered-dipolar magnetic field.

inferred from the old analysis. Thus, in both cases the offset angle is above ∼25° with 84% probability (which is the same as reported in S22 using the 3C50 data set). This implies a likely deviation from a centered-dipolar magnetic field.

4.2. Scaling of Credible Intervals with the Source-to-background Count Ratio

As shown in Section 3, for identical sampler settings, we found that the constraints for the NS radius are roughly 20% tighter when using the new NICER data set combined with the original XMM-Newton data. However, when analyzing only the NICER data, the radius constraints remained essentially unchanged. This can be attributed to the differences in the inferred ratio between source and background photons.

This ratio is expected to influence the radius credible interval as outlined, for example, in Equation (4) of Psaltis et al. (2014). This expression is obtained by assuming simplified model properties, e.g., considering small hot regions with isotropic emission (see also Poutanen & Beloborodov 2006), and assuming no uncertainty in the fundamental amplitude, background, and geometry parameters. Under these assumptions the uncertainty in the radius should scale as

where S is the number of source counts, N = S + B is the number of total counts, and B is the number of background counts. Although this relation is extremely simplified, we can use it to make a rough comparison to the observed radius credible interval scaling. Here we only compare runs performed with the same sampler settings (to avoid their influence).

We found that the fraction of inferred background photons increases with the new data set, especially in the case of the NICER-only analysis (likely due to the different filtering of the data). The expected number of source counts is even smaller for the new data than for the old data in a large fraction of samples; for the maximum likelihood sample in particular it is 20% smaller. The old data allowed a large variety of different background versus source count solutions (see the green stepped curve in the left panel of Figure 4), but for the new data the background is better constrained and higher on average (in comparison to the source counts). Thus, it is not trivial to predict how the radius credible intervals should change when increasing the number of observed counts.

For the joint NICER and XMM-Newton analysis, S/N is better constrained, and does not change drastically when using the new NICER data, as mentioned in Section 3.2. The inferred S for most samples in the joint analysis is higher with the new data, unlike for the NICER-only analysis. However, even in this case, accounting for the uncertainties in the inferred NICER backgrounds would allow a significant variation in the predicted scaling for the radius credible intervals. Using S and S/N values based on one standard deviation limits of the inferred backgrounds, 20 Equation (2) predicts that credible intervals can become anything from 10% broader to 40% tighter. 21 The observed 20% tightening is consistent with this.

Based on our results, it is not trivial to predict how the radius credible intervals evolve with more photons, at least in the presence of a large background and a large associated uncertainty. Promisingly though, the inferred ratio between the source and background photons seems to be better constrained with the new data set. Thus, once this ratio is stable enough, the radius intervals are expected to tighten when increasing the total number of photons, as seen already in our joint NICER and XMM-Newton analysis. Achieving a small ∼±5% uncertainty for the PSR J0740+6620 radius would still likely require increasing the current exposure time with NICER to at least 8 Ms, based on the new headline results and the  dependence on the number of counts.

dependence on the number of counts.

4.3. Comparison to the Results of Dittmann et al. (2024)

Using the same NICER and XMM-Newton data sets as in this paper, an independent analysis carried out by Dittmann et al. (2024, hereafter D24) reports the PSR J0740+6620 radius to be  km. This is broadly consistent with the

km. This is broadly consistent with the  km reported in this paper. Although the radius credible interval of D24 is about 50% broader than here, the difference is notably smaller than the ∼80% difference between the headline results reported by M21 and R21 (the former using the same code as D24, the latter using X-PSI). In particular, the radius lower limit—which as discussed previously is easier to constrain—is very similar for both, as was already the case in the 3C50 analyses of S22.

km reported in this paper. Although the radius credible interval of D24 is about 50% broader than here, the difference is notably smaller than the ∼80% difference between the headline results reported by M21 and R21 (the former using the same code as D24, the latter using X-PSI). In particular, the radius lower limit—which as discussed previously is easier to constrain—is very similar for both, as was already the case in the 3C50 analyses of S22.

The remaining differences between the results of this paper and D24 could be related to different prior choices and/or different sampling procedures.

22

When D24 require the radius to be less than 16 km (the prior upper limit used in our analysis), they get  km. In addition, D24 use a hybrid nested sampling (MultiNest) and ensemble Markov Chain Monte Carlo (emcee; Foreman-Mackey et al. 2013) scheme, where a significant amount of the time can be spent in the ensemble sampling phase. When MultiNest is used, our resolution settings are higher in terms of the live points for runs reported in this paper. However, MultiNest-only test comparisons were made using similar settings; when both teams use 4096 live points and SE = 0.01 the results are

km. In addition, D24 use a hybrid nested sampling (MultiNest) and ensemble Markov Chain Monte Carlo (emcee; Foreman-Mackey et al. 2013) scheme, where a significant amount of the time can be spent in the ensemble sampling phase. When MultiNest is used, our resolution settings are higher in terms of the live points for runs reported in this paper. However, MultiNest-only test comparisons were made using similar settings; when both teams use 4096 live points and SE = 0.01 the results are  km for D24 and

km for D24 and  km using X-PSI. Removing the samples with radius above 16 km the D24 result becomes

km using X-PSI. Removing the samples with radius above 16 km the D24 result becomes  km. As discussed in Appendix B however, the effect of SE is connected to the initial prior volume and may thus not be directly comparable to D24 who assume larger priors in many of the parameters (see M21 and R21 for details). If we use the same number of live points (4096) as D24 but drop the X-PSI SE to 0.0001 we get a radius of

km. As discussed in Appendix B however, the effect of SE is connected to the initial prior volume and may thus not be directly comparable to D24 who assume larger priors in many of the parameters (see M21 and R21 for details). If we use the same number of live points (4096) as D24 but drop the X-PSI SE to 0.0001 we get a radius of  km, very close to the radius-limited D24

MultiNest-only result.

23

km, very close to the radius-limited D24

MultiNest-only result.

23

In summary, the MultiNest-only results of this paper and D24 match fairly well when using the same radius prior upper limit and adjusting the sampler settings. Thus, the variation between the headline results may be related to different prior choices, to the differences between the MultiNest and emcee samplers (Ashton et al. 2019), and to the possible lack of convergence due to imperfect sampler settings.

5. Conclusions

We have used a new NICER data set, with about 1.13 Ms additional exposure time, to reanalyze the pulse profiles of PSR J0740+6620. Analyzing the data jointly with the same XMM-Newton data as in R21, we infer the NS radius to be  km, bounded by the 16% and 84% quantiles. This interval is consistent with the previous result and roughly equally wide. It was obtained with enhanced sampler settings, which were found to increase the radius upper limit compared to the result if using the old settings. Even though we could not conclusively prove convergence of the upper limit, we consider the new results more robust after an extensive exploration of the likelihood surface and estimate the remaining systematic error to be ≲0.1 km. In addition, we found an expected parameter recovery in the analysis of simulated data resembling the new PSR J0740+6620 observation (see Appendix A). The radius lower limit is now also slightly more constraining than before; we exclude R < 11.15 km at 95% probability for this pulsar, and therefore the softest EOSs.

km, bounded by the 16% and 84% quantiles. This interval is consistent with the previous result and roughly equally wide. It was obtained with enhanced sampler settings, which were found to increase the radius upper limit compared to the result if using the old settings. Even though we could not conclusively prove convergence of the upper limit, we consider the new results more robust after an extensive exploration of the likelihood surface and estimate the remaining systematic error to be ≲0.1 km. In addition, we found an expected parameter recovery in the analysis of simulated data resembling the new PSR J0740+6620 observation (see Appendix A). The radius lower limit is now also slightly more constraining than before; we exclude R < 11.15 km at 95% probability for this pulsar, and therefore the softest EOSs.

Acknowledgments

This work was supported in part by NASA through the NICER mission and the Astrophysics Explorers Program. T.S., D.C., Y.K., S.V., and A.L.W. acknowledge support from ERC Consolidator grant No. 865768 AEONS (PI: Watts). The use of the national computer facilities in this research was subsidized by NWO Domain Science. In addition, this work used the Dutch national e-infrastructure with the support of the SURF Cooperative using grant No. EINF-4664. Part of the work was carried out on the HELIOS cluster including dedicated nodes funded via the abovementioned ERC CoG. Astrophysics research at the Naval Research Laboratory is supported by the NASA Astrophysics Explorer Program. S.G. acknowledges the support of the Centre National d’Etudes Spatiales (CNES). W.C.G.H. acknowledges support through grant 80NSSC23K0078 from NASA. S.M. acknowledges support from NSERC Discovery grant RGPIN-2019-06077. This research has made use of data products and software provided by the High Energy Astrophysics Science Archive Research Center (HEASARC), which is a service of the Astrophysics Science Division at NASA/GSFC and the High Energy Astrophysics Division of the Smithsonian Astrophysical Observatory. We would also like to thank Will Handley, Johannes Buchner, Jason Farquhar, Cole Miller, and Alexander Dittmann for useful discussions.

Facilities: NICER - , XMM - Newton X-Ray Multimirror Mission satellite

Software: Cython (Behnel et al. 2011), GetDist (Lewis 2019), GNU Scientific Library (Galassi et al. 2009), HEASoft (NASA High Energy Astrophysics Science Archive Research Center (Heasarc), 2014), Matplotlib (Hunter 2007), MPI for Python (Dalcín et al. 2008), MultiNest (Feroz et al. 2009), nestcheck (Higson 2018), NumPy (van der Walt et al. 2011), PyMultiNest (Buchner et al. 2014), Python/C language (Oliphant 2007), SciPy (Virtanen et al. 2020), and X-PSI (Riley et al. 2023).

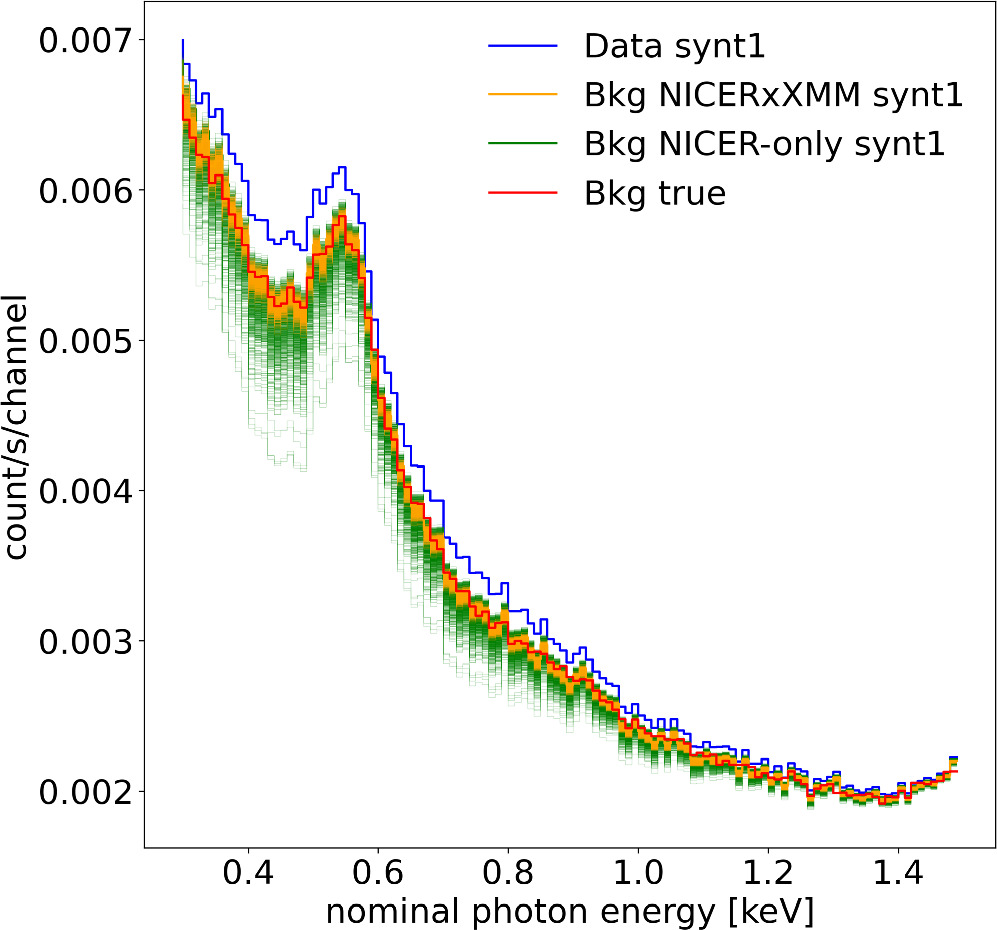

Appendix A: Simulations

To test the robustness of the analyses of this paper, we performed several inference runs using synthetic data. We generated three different noise realizations for both the synthetic NICER and XMM-Newton data using the maximum likelihood parameter vector found in the initial new joint NICER and XMM-Newton analysis with real data (the run using the same sampler settings as in R21). The parameters are shown in Table 2. The input background spectrum for each instrument was set to be the one that maximized the instrument-specific likelihood for the real data (e.g., for NICER the red curve in Figure 7). For each synthetic data set, Poisson fluctuations were added to the sum of counts from the hot spots and the background using a different seed for the random number generator. The exposure times were matched to those of the true observations.

Figure 7.

Comparison of the inferred NICER background for the synthetic data set “synt1” based on either the NICER-only or joint NICER and XMM-Newton analysis. The blue stepped curve shows the synthetic data. The orange stepped curves show background curves that maximize the likelihood for 1000 equally weighted posterior samples from the joint NICER and XMM-Newton run. The green stepped curves (partly hidden by the orange) show the same for samples from the NICER-only run. The red stepped curve shows the injected background for the synthetic data. The complete figure set includes the inferred backgrounds for two other synthetic data realizations. (The complete figure set (3 images) is available.)

Download figure:

Standard image High-resolution imageTable 2. Injected Model Parameters

| Parameter | Injected Value |

|---|---|

| M [M⊙] | 2.088 |

| Req [km] | 11.57 |

| Θp [radians] | 1.324 |

| Θs [radians] | 1.649 |

| ϕp [cycles] | −0.258 |

| ϕs [cycles] | −0.328 |

| ζp [radians] | 0.117 |

| ζs [radians] | 0.098 |

![${\mathrm{log}}_{10}\left({T}_{p}\ [{\rm{K}}]\right)$](https://content.cld.iop.org/journals/0004-637X/974/2/294/revision1/apjad5f1fieqn64.gif)

| 6.048 |

![${\mathrm{log}}_{10}\left({T}_{s}\ [{\rm{K}}]\right)$](https://content.cld.iop.org/journals/0004-637X/974/2/294/revision1/apjad5f1fieqn65.gif)

| 6.086 |

| 0.041 |

| D [kpc] | 1.187 |

| NH [1020 cm−2] | 0.067 |

| αNICER | 0.905 |

| αXMM | 0.811 |

Note. See the parameter descriptions in Table 1.

Download table as: ASCIITypeset image

For the inference runs, we applied the same prior distributions as in the analysis of the real data. For the MultiNest resolution settings we used the same choices as for the real data, but with SE = 0.1 to keep the computational cost manageable. We note that the credible interval for the radius and a couple of other parameters was larger for the headline results with SE = 0.01. However, the expected parameter recovery found from the simulations still indicates that at least the headline intervals are unlikely to be heavily underpredicted.

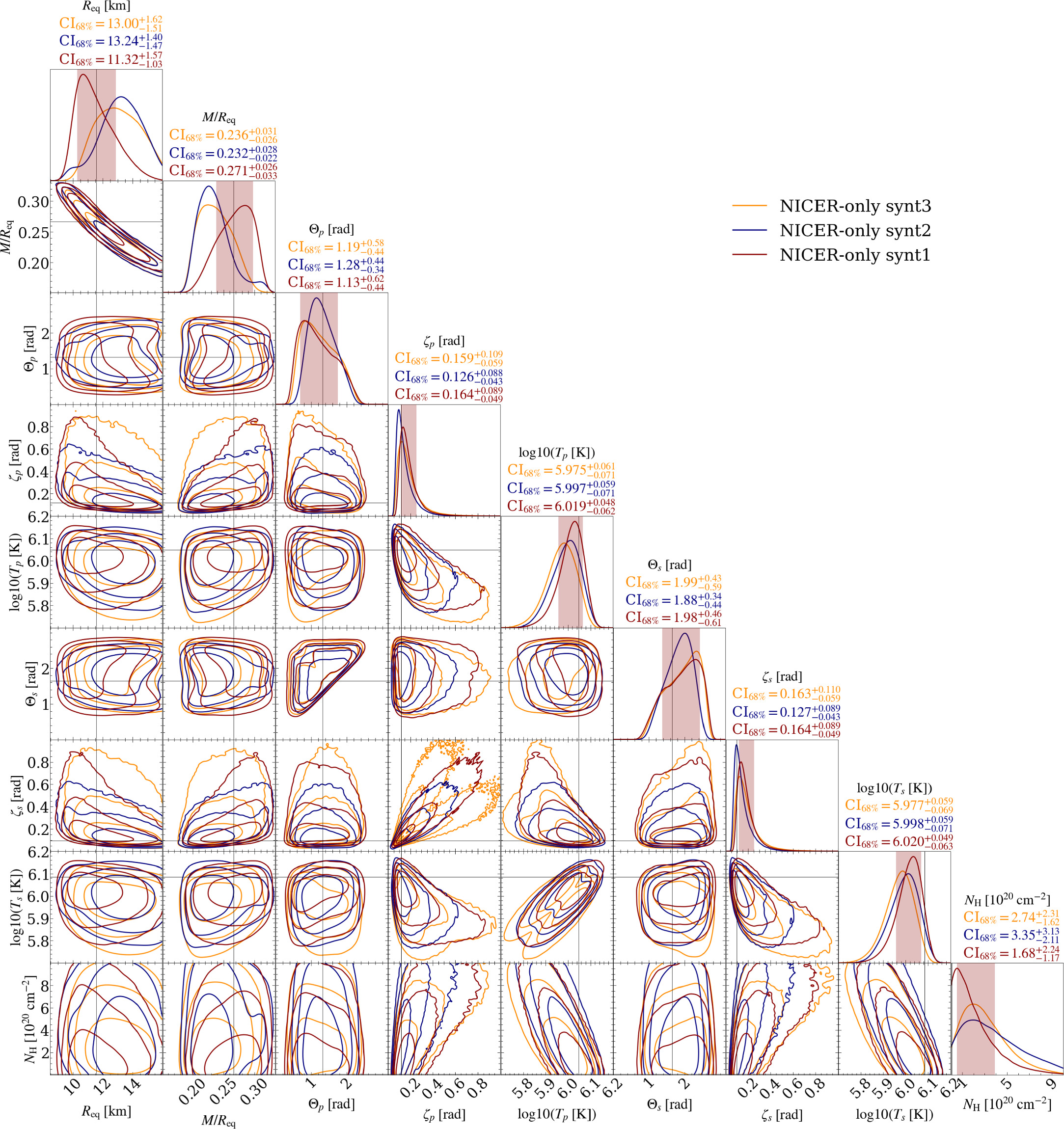

We performed six inference runs in total: three analyzing only NICER data (one for each noise realization) and three analyzing the NICER and XMM-Newton data jointly (one for each data pair with the same seed when creating the data). The results of these runs are shown in Figures 8 (NICER only) and 9 (joint NICER and XMM-Newton) for the most varying parameters (in contrast, the posteriors for the mass, inclination, and distance were always found to follow closely their priors). We see that the true radius, compactness, hot spot properties, and the hydrogen column density NH values are better recovered when jointly fitting NICER and XMM-Newton in all the three cases. However, even for the NICER-only analysis, the injected radius is found within the 68% credible limits in two out of three cases (the expectation being between one and three). 24 If accounting for all the sampled parameters in the NICER-only runs, the injected values are found within the 68% intervals in 67% of the cases (the expectation being between 62% and 76%). For the joint NICER and XMM-Newton analyses, all the injected radii, and 78% of all the injected parameters, are found inside the 68% interval (the expectations being the same as for the NICER-only case). The inferred background spectra were also found to resemble the true background for all the runs, although with less scatter in the case of the joint NICER and XMM-Newton runs, as seen in Figure 7.

Figure 8. Posterior distributions for the most run-to-run varying parameters using the synthetic NICER data sets and the ST-U model. The shaded vertical bands show the 68.3% credible intervals for the run with synthetic data labeled as “synt1.” The thin black lines represent the injected values. See the caption of Figure 2 for more details about the figure elements.

Download figure:

Standard image High-resolution imageFigure 9. Posterior distributions for the most run-to-run varying parameters using the synthetic NICER and XMM-Newton data sets and the ST-U model. The shaded vertical bands show the 68.3% credible intervals for the run with synthetic data labeled as “synt1.” The thin black lines represent the injected values. See the caption of Figure 2 for more details about the figure elements.

Download figure:

Standard image High-resolution imageWe can also see that the inferred credible intervals for the simulated data are a bit larger than for the corresponding true data (see Section 3 for the true data). In the case of the NICER-only analysis, the width of the 68% radius interval is around 2.5–3.1 km for all simulations, and about 1.9 km for the true data. In the case of the joint NICER and XMM-Newton analysis, the width of the same interval is around 1.9–2.4 km for the simulations, and about 1.8 km for the true data with the same sampler settings (but 2.2 km for the higher-resolution headline results). However, this difference is likely due to the small number of runs on the simulated data. We also performed two additional low-resolution NICER-only runs (with 4 × 103 live points instead of 4 × 104 used otherwise) to see how the credible intervals for synthetic data depend on the sampling resolution. We found 0.3–0.4 km wider 68% intervals for the radius when using the higher resolution, which is in accordance with what was found for the true data in R21.

In the current analysis, we did not test how the observed parameter recovery could change if selecting other injected values. More simulation tests with X-PSI were recently reported by Kini et al. (2023) and Vinciguerra et al. (2023) with different parameters, models, and instruments, showing the expected recovery when the data were created and fitted with the same model. Our results with the PSR J0740+6620-like simulated data support those findings but show also that analyzing many instruments jointly may improve the accuracy of the recovered values, given our assumptions of cross-calibration uncertainties.

Appendix B: Treatment of Sampling Efficiency in X-PSI

To clarify the terminology and treatment of the MultiNest SE parameter in X-PSI, we recap here the procedure described in Appendix B.5.3 of Riley (2019). As mentioned there (and in Footnote 16), the native SE setting is modified to account for the initial prior volume, which can differ from unity in our models unlike in the original MultiNest algorithm (see Algorithm 1 in Feroz et al. 2009). That algorithm uses a prior volume that is shrunk at each iteration so that the remaining expected prior volume is  , where i is the iteration number and N is the number of live points. This is used to set the minimum volume Vm of the approximate isolikelihood-bounding ellipsoid from which higher-likelihood points are drawn at each iteration. To avoid sampling from a too small volume (in case the ellipsoidal approximation is not accurate), the minimum volume is additionally enlarged to

, where i is the iteration number and N is the number of live points. This is used to set the minimum volume Vm of the approximate isolikelihood-bounding ellipsoid from which higher-likelihood points are drawn at each iteration. To avoid sampling from a too small volume (in case the ellipsoidal approximation is not accurate), the minimum volume is additionally enlarged to  , where e is the expansion factor and the inverse of SE. This means, in practice, that Vm shrinks exponentially at every iteration, but the initial value is now e instead of 1.

, where e is the expansion factor and the inverse of SE. This means, in practice, that Vm shrinks exponentially at every iteration, but the initial value is now e instead of 1.

In X-PSI analyses, however, the initial prior volume is usually less than 1 due to the rejection rules applied. For example, if the star is too compact, a likelihood value below the MultiNest log_zero threshold is returned so that the sample will be automatically ignored. Therefore, we set the shrinkage of Vm to start from He (instead of e), where H is the true estimated initial prior volume. This is done by setting the nominal input value of the SE parameter to 1/(He) in the code. When reporting SE values we still refer to 1/e, since that describes the enlargement relative to the true initial prior.

Footnotes

- 11

- 12

The versions are practically identical for the considered models; the only actual difference is the fix of a numerical ray-tracing issue since v0.7.12, affecting only a few parameter vectors with emission angles extremely close to 90° (https://github.com/xpsi-group/xpsi/issues/53). This is not expected to alter the inferred radius.

- 13

- 14

Defined as a product of ARF and RMF, which were created through the nicerarf and nicerrmf tools.

- 15

- 16

Note that this factor is not the nominal MultiNest SE parameter (SE′), since SE′ is modified in X-PSI to account for the initial prior volume that is smaller than unity as explained in Riley (2019) and in Appendix B. The SE′ value is about 6.9 times higher than the SE value for all the models in this paper.

- 17

However, we checked that reanalyzing the old data with the new model choices does not significantly affect the results.

- 18

Note that the old joint NICER and XMM-Newton results had an incorrect BACK_SCAL factor and were obtained by importance sampling from a larger 15% effective-area uncertainty to the 10.4% uncertainty, rather than directly sampling with the 10.4% uncertainty. However, these issues are not expected to have any significant effect on the results (the BACK_SCAL issue was also tested in Riley et al. 2021b, with a low-resolution run).

- 19

Which comes from assuming a 5% uncertainty in the shared scaling factor and a 3% uncertainty in the telescope-specific factors as in the test case of S22.

- 20

Using 30 randomly drawn samples from the equally weighted posterior samples.

- 21

Here we neglected the number of counts from XMM-Newton as it is much smaller than that from NICER.

- 22

- 23

Note that our headline result used SE = 0.01 instead of SE = 0.0001 but also 40,000 live points instead of 4096, leading to roughly 10 times more accepted samples in the highest likelihood parameter space (also across other regions).

- 24

We estimate the expected ranges based on the sample size and 16% and 84% quantiles of the percent point function of the binomial distribution with 68% success rate. When considering many model parameters combined, the range is only indicative since it assumes independence between the parameters, which are instead correlated.